Human Error and Human Factors

- Category: Risk and Safety Theory

- Topic: Theory and Process

- Type: Risk Notes

Overview of Issue

Humans have limitations and are fallible, which makes human error predictable, inevitable, and frequent. Fortunately, most human errors cause no harm; however, when they combine with flaws in the system (latent errors), harm can occur. Human factors examines how humans interact with devices and their work environments, and seeks to reduce the risk of error by making those devices and environments more human-centered. Risk managers play a role in building awareness of human error and human factors, including how they can contribute to incidents and which organizational responses are appropriate.

Key Points

- Human error occurs through unintended and intended actions.

- Analysis of human factors plays a role in understanding how incidents occur.

Things to Consider

Human/Active/Sharp-end Errors

- Humans are the most critical component of the healthcare system and are constantly interacting with one another and other system components.

- Risk managers and incident reviewers need to understand the nature and types of human errors, not in a futile attempt to perfect human performance, but to ensure a complete understanding of how an event unfolded and how it might be prevented or mitigated in the future.

Unintended and Intended Actions

- In general terms, an error involves either doing something wrong (commission) or failing to do the right thing (omission). Various taxonomies describe human error in more detail. A summary is provided below:

- Unintended actions
  - Lapses – memory failures (e.g., losing one's place, skipping steps);
  - Slips – attentional failures (e.g., intrusions, omissions, misordering);
  - Often caused by distractions, interruptions, fatigue, or stress;
  - Difficult to eliminate; their likelihood can be reduced through work, device, or environmental redesign.

- Intended actions
  - Mistakes
    - Insufficient knowledge;
    - Application of the wrong rule or heuristic*;
    - Caused by lack of experience or insufficient training;
    - Their likelihood can be reduced through training, supervision, and interventions to improve diagnostic reasoning.
  - Violations (of accepted, appropriate, and expected practices and protocols)
    - Routine – tacitly accepted over time; generally due to poorly designed processes;
    - Situational/optimizing/reasoned – risks and benefits are assessed, and the decision to violate the protocol is often made to prevent or mitigate harm;
    - Reckless – deliberate disregard for protocols; harm is foreseeable but not intended**;
    - Sabotage – intention to cause harm**.
    - Risk reduction efforts depend on the type of violation and may include systems redesign, protocol revisions, or proportionate discipline.

* This includes errors made as a result of cognitive biases, particularly in diagnostic processes, including confirmation and availability biases.

** If found, these types of violations would be addressed outside of the incident analysis process.

Person and System Approaches

- There are two approaches or models of human error: person and system.

- The person approach focuses on the errors of individuals, attributes them to personal weaknesses such as forgetfulness or inattention, and blames the individual.

- The system approach examines the conditions under which individuals work and attempts to build safeguards that avert errors or mitigate their effects. High reliability organizations exemplify this approach.

Human Factors

- Human factors analysis can help in understanding events and in developing effective recommendations for improvement (e.g., usability testing, heuristic analysis, and device standardization).

- Human factors engineering applies knowledge of human strengths and limitations to the design of systems that involve people, tools, technology, and work environments, with the goal of making those systems safer.

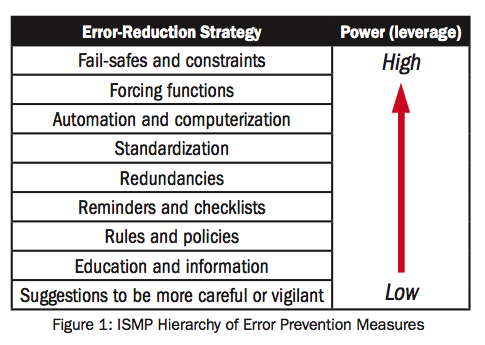

- The Institute for Safe Medication Practices (ISMP) hierarchy of error-prevention measures is based on human factors principles (see Figure 1).

References

- HIROC. (2015). Critical incidents and multi-patient events. Risk Resource Guide.

- Agency for Healthcare Research and Quality Patient Safety Net. (2016). Human factors engineering. Patient Safety Primer.

- Canadian Patient Safety Institute. (2012). Canadian incident analysis framework.

- ECRI Institute. (2011). System safety analysis. Healthcare Risk Control, Supplement A.

- Healthcare Performance Improvement (HPI). (2009). SEC & SSER patient safety measurement system for healthcare. HPI White Paper Series.

- Institute for Safe Medication Practices. (2006). Selecting the best error-prevention “tools” for the job. Community/Ambulatory Care ISMP Medication Safety Alert.

- National Patient Safety Agency. (2003). Root cause analysis toolkit.

- Reason, J. (2000). Human error: Models and management. BMJ, 320, 768–770.

- Wachter, R. (2012). Understanding patient safety (2nd ed.). McGraw-Hill.